Information technology has greatly benefited the agriculture sector. You’ve probably read multiple articles that say ‘mobile phones empower farmers,’ but I wanted to ask the farmers themselves. Last year, I conducted research on how rural farmers in Tanzania were using Tigo Kilimo. To learn why farmers were using this SMS-based agricultural service, I spoke to 10 farmers who used the service and 5 farmers who did not.

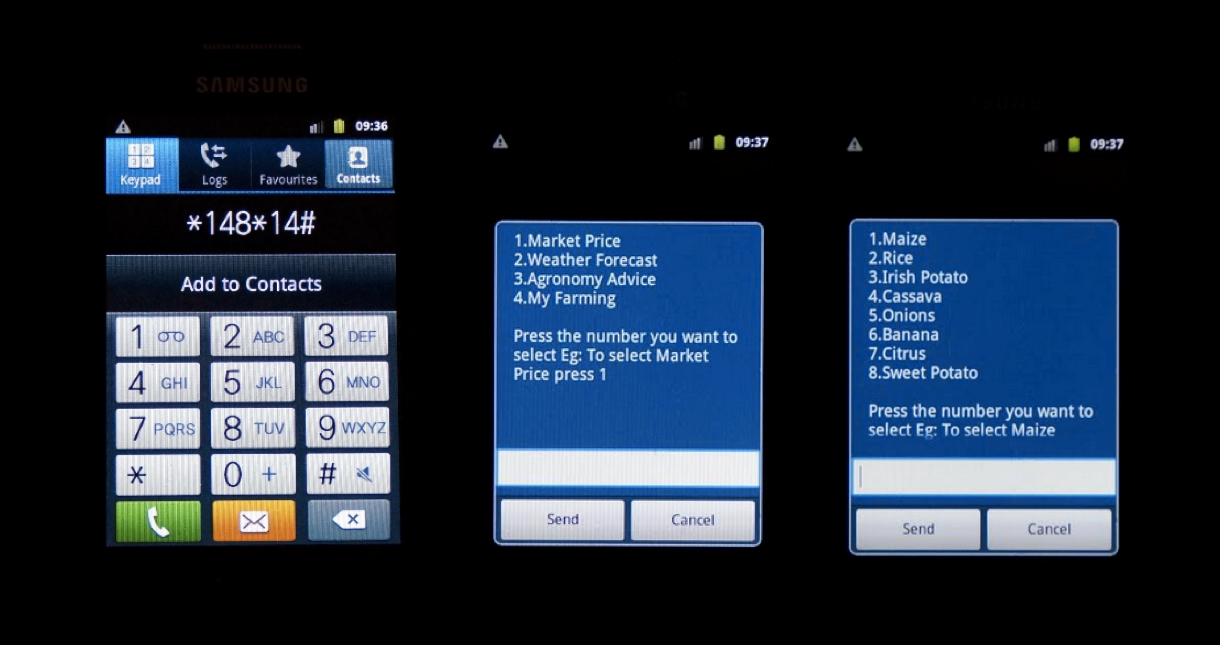

How does Tigo Kilimo work?

Tigo, one of the most popular mobile network operators in Tanzania, launched its mAgri service Tigo Kilimo in April 2012. Tigo Kilimo is an SMS-based value-added service that provides information about weather, crop prices, and agronomy techniques to small-scale farmers. By October 2013, Tigo Kilimo already had over 40,000 subscribers. Users access the service by dialing *148*14#. After completing registration, which is free, they can consult a USSD (Unstructured Supplementary Service Data) menu and select the information they want. An SMS containing the requested information is then sent to the customer. Tigo now offers its customers free and unlimited access to all kinds of agricultural information.
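For readers who like to see the flow spelled out, here is a minimal sketch of the dial, register, select, and receive-SMS sequence described above. It is only an illustration: the short code and the three topics come from this post, but the menu numbering, the `Session` class, the `dial` and `select` functions, and the placeholder SMS text are all assumptions of mine, not Tigo's actual implementation.

```python
from dataclasses import dataclass, field

SHORT_CODE = "*148*14#"  # short code mentioned in this post

# Topics come from the post; the numbering is an assumption for illustration.
MENU = {"1": "weather", "2": "crop prices", "3": "agronomy techniques"}


@dataclass
class Session:
    """Hypothetical per-user state for one USSD session."""
    registered: bool = False
    outbox: list = field(default_factory=list)  # SMS messages "sent" to the user


def dial(session: Session, code: str) -> str:
    """Return the menu text shown after dialing the short code."""
    if code != SHORT_CODE:
        return "Unknown code"
    if not session.registered:
        session.registered = True  # registration is free, so no payment step
    return "\n".join(f"{key}. {topic}" for key, topic in MENU.items())


def select(session: Session, choice: str) -> str:
    """Queue an SMS for the chosen topic and return the on-screen reply."""
    topic = MENU.get(choice)
    if topic is None:
        return "Invalid choice"
    # Placeholder text only; real content would come from the service.
    session.outbox.append(f"[SMS] Placeholder {topic} information")
    return f"You will receive an SMS about {topic}."


session = Session()
print(dial(session, SHORT_CODE))
print(select(session, "2"))
print(session.outbox)
```

The point of the sketch is the division of labor: the USSD menu only handles navigation, while the information itself arrives separately by SMS, so a farmer can keep it and reread it later.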

So, why are farmers in Tanzania using Tigo Kilimo?

1. Access to information increases productivity

Access to relevant and up-to-date information is crucial for farmers to plan farming activities and to choose which crops to grow. Respondents requested information via Tigo Kilimo mainly during the cropping season. Short- and long-term weather information is among the most important kinds of information in the production stage. Without reliable weather predictions, farmers risk losses, for example by seeding too early or choosing unsuitable crops. Combining the weather information and agronomy advice provided by Tigo Kilimo enabled farmers to increase their overall productivity.

2. Improves the welfare of farmers and their families

Without timely market price information, farmers limit their revenue when selling their crops to middlemen and customers. Tigo Kilimo has reduced the cost of researching market prices: farmers obtain prices for different crops in different markets and can decide strategically what to grow and where to sell. Market prices received through Tigo Kilimo serve as a reference point in negotiations. Farmers are still willing to bargain with buyers, but they can no longer be fooled by customers or middlemen.

The surpluses generated were saved or used for family matters and household needs, increasing security and stability in the face of unpredictable external events. Additional income was invested in education as well as in the expansion of economic activities.

3. Empowers female farmers

Female respondents stated that Tigo Kilimo helped them to gain not only financial independence but also knowledge. Since most agricultural information and extension services are targeted at male farmers, women often lack valuable agricultural information. Domestic workloads also reduce the time they have to look for the information they need. Tigo Kilimo allows women to request and read agricultural information whenever and wherever they want. However, it is important to remember that the effective use of Tigo Kilimo requires regular access to a mobile phone, which is less likely among women.

4. The benefit extends to the community

All of the farmers I spoke to stated that they shared information obtained through Tigo Kilimo with others in the community, such as neighbors, family members, and friends who cannot access Tigo Kilimo due to lack of education or income. Information is discussed during group meetings, which encourages knowledge exchange. Tigo Kilimo can improve the social capital of a community, since “infomediaries” pass additional knowledge on to community members who lack access to it. Tigo Kilimo users also promote the service within their social networks, convincing non-users to subscribe.

What are farmers’ suggestions for mAgri services like Tigo Kilimo?

- Include farmers in the design process: Including farmers in the design process of mAgri services would help to identify farmers’ needs. Incorporating farmers’ indigenous knowledge into the service would help it better serve the community by providing information on the relevant crops.

- Simplify the subscription process: Some of the farmers had heard about Tigo Kilimo but didn’t use it because they failed to subscribe. If the subscription process were simpler, they would also benefit from the service.

- Expand marketing to rural areas: Some of the farmers had never heard of services like Tigo Kilimo. By advertising to more farmers, Tigo Kilimo could benefit many more of them.

While Tigo Kilimo’s reach may be restrained by hurdles like poor road infrastructure, the unavailability of farming inputs, and lack of access to mobile devices, its benefits are undeniable. Tigo Kilimo has provided many farmers with relevant and up-to-date agricultural information, positively influencing their agility, security, and income.

What has your experience been with mAgri services? Do you know of other mAgri services that are benefiting farming communities? Tweet at us @TechChange or comment below.

Interested in learning about other ways mobile phones are being used in international development? Sign up for our Mobiles for International Development course that begins early next year!

About the author

Sophie is an alumna of TechChange’s Mobiles for International Development course. She is an Entrepreneur in Residence in the field of product management for a Berlin internet startup. Sophie arrived in the Berlin startup scene three years ago and is very passionate about business solutions that make the world a better place. She loves Kiswahili and has spent more than a year in Kenya and Tanzania, where she is exploring the local (social) startup scene. She has been working as a product manager for an e-commerce company in Berlin. Sophie is about to get her Bachelor’s degree in African Studies and Economics with a thesis focusing on the impact of a mobile-based agricultural information service on rural livelihoods in Tanzania.