In 2015, TechChange launched the Technology for Monitoring & Evaluation Diploma Program, which combined three TechChange courses (Tech for M&E, Tech for Data Collection and Survey Design, Tech for Data Visualization) into one comprehensive program. The program was meant to give busy working professionals a robust foundation in technology for M&E through the three core courses as well as workshops and office hours with course facilitators. Our first cohort is finishing up the program as we begin 2016, and a new session will launch on January 25.

Today we are very excited to chat with Sonja Schmidt, the Senior M&E Advisor to JSI’s AIDSFree project, who is one of the first participants to complete the Technology for Monitoring & Evaluation Diploma Program: Working Professionals Experience. She discusses her experience with the overall program, how each course influenced her work, as well as how she was able to better understand the use of ICTs in M&E.

How did you come across the Tech for M&E Diploma Program?

A colleague of mine from JSI had sent around some links for TechChange courses. When I clicked the links I noticed the Diploma Program, and thought that this would be a good option to take advantage of the three courses in order to get a wider foundation on the topic.

Have you taken online courses before? Did the program meet your expectations?

I had never taken an online course before, so this was a very new experience for me. I found it challenging in the beginning, particularly with the first course that I took, because I initially felt overwhelmed and struggled a bit with learning how to move around the platform and manage the material.

That being said, the program far exceeded my expectations. I have to compliment TechChange because, being an M&E expert, I look at most material with a critical eye, but I found that the material that was put together and all of the speakers/guest experts were stellar. I was also quite pleasantly surprised by the group dynamics present on the platform. I did not expect this from a virtual group, but in the end there were names that kept popping up, and I actually had the chance to meet someone from the course in person. I am almost sad that it has ended.

Are you new to the field of M&E? If not, why did you think this would be valuable to your career?

I have many years of experience in the M&E field. Despite this fact, I realized that the concept of ICT and M&E emerged on the scene pretty suddenly – it did not really exist as an articulated concept even as recently as 3 years ago. I remember meeting someone a few years back who had created his own company around an app meant to improve data collection for surveys, and was surprised because I never thought that that would take off. Now, several years later I find it fascinating how this has become mainstream.

So, my main reason for taking the program was to learn more about this new and rapidly changing field, the intersection of technology and monitoring & evaluation, and get a better grasp of it.

How have you been able to use what you learned in the courses in your work, and how has the program overall been helpful to you?

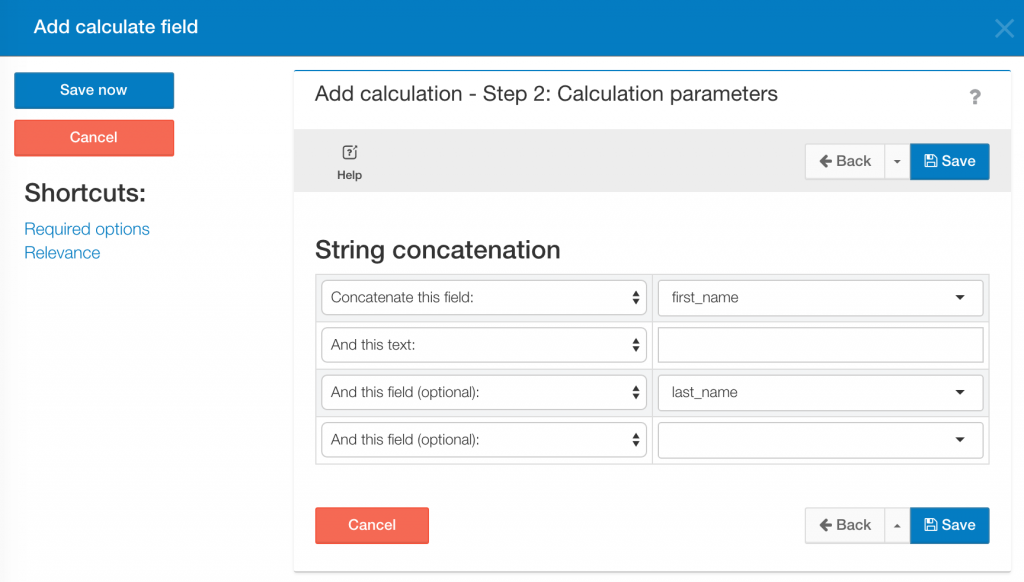

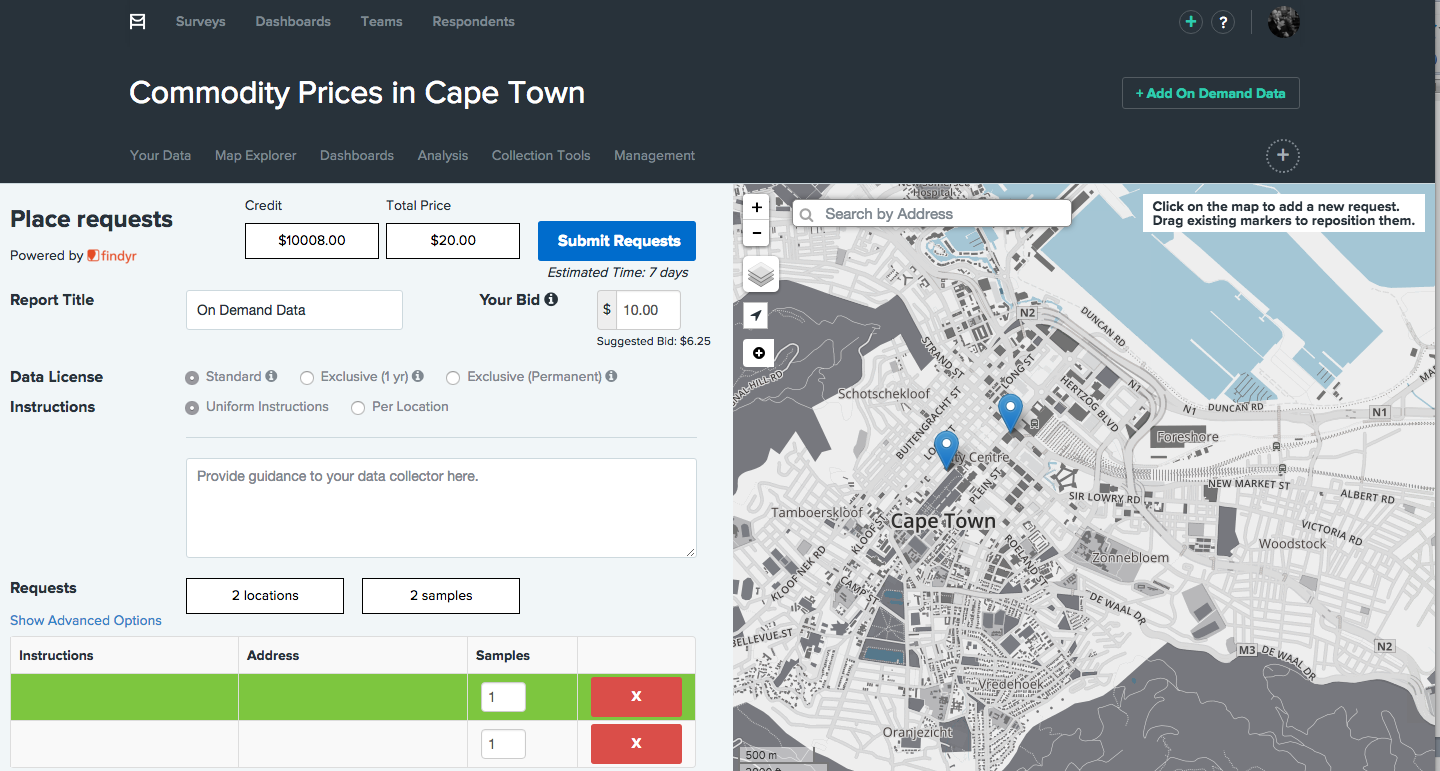

I have definitely been able to use what I learned in the courses, and the Diploma Program, as foundations for my work. The Technology for M&E course, while a bit repetitive for me sometimes, as I’m an experienced M&E professional, still provided me with exposure to new materials as well as to other people’s perspectives and approaches. The Technology for Data Collection & Survey Design course was not as applicable to my personal work; however, it did improve my capacities as an M&E advisor in terms of being able to recommend methods or software, or considerations to take into account, to in-country M&E folks who might be the ones actually designing M&E programs themselves. The Technology for Data Visualization course is the one that had the most impact on my work directly, because a big part of my work is reporting to stakeholders and presenting data. The Introduction to Excel for Data Visualization course was also extremely helpful because Excel is familiar software that I will always use; especially for organizations that do not have much funding, Excel is a very powerful and useful tool.

In general, I think the courses were useful in my work in that when I come across a particular issue, I now ask myself how I can improve or do something better. I can then go back to the material, target specific areas, and continue to use the program material as a tool for learning in my work. I am also currently working on developing a training in Tanzania on data quality, and I plan to discuss with my colleagues ways to use, for example, phones to more quickly submit data from site facilities to our central office.

Interested in the TechChange Technology for Monitoring & Evaluation Diploma Program? Get more information and apply here. Enrollment is open and ongoing, but our next batch of courses begins January 25, 2016. It is not too late to sign up and join this amazing program with participants from all corners of the globe!

About Sonja

Sonja has over 15 years of experience in international public health, with a focus on infectious diseases, including TB, HIV/AIDS and immunization programs. She has long-term country experience in Bangladesh, India, Nepal and Ethiopia and has worked for several UN organizations (UNIFEM, UNICEF, WHO) and numerous USAID-funded projects. Currently, as the Senior M&E Advisor to JSI’s AIDSFree project, she oversees and coordinates the monitoring and evaluation of the project and guides country projects in M&E planning, data quality assessment, data analysis and use. Sonja has an MA in medical anthropology and an MPH with a focus on policy and management.